Types of Evaluation

Last Updated: August 2022

1.) Formative Evaluation

A formative evaluation is conducted at the beginning of a new program or when an existing program is being modified or used in a new setting. It examines the feasibility, appropriateness, and acceptability of a program before it goes into implementation. A formative evaluation provides insight into any modifications the plan needs before it is implemented, which in turn increases the likelihood of program success.

2.) Economic Evaluation

An economic evaluation helps assess the costs of a program relative to its effects. This can be a cost-benefit analysis or a cost-effectiveness analysis. Cost-benefit analysis requires an estimate of the costs of undertaking a program and an estimate of its benefits. These costs and benefits are then translated into comparable monetary units. The alternative approach, cost-effectiveness analysis, requires monetizing only the program costs. Its benefits are expressed in terms of the costs of achieving a given result.
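
To make the distinction concrete, here is a minimal Python sketch rather than a prescribed method. The function names and all figures are hypothetical and chosen only for illustration. Cost-benefit analysis compares monetized benefits with costs, while cost-effectiveness analysis divides costs by an outcome counted in its natural units.

```python
def cost_benefit(total_cost, monetized_benefit):
    """Return (net benefit, benefit-cost ratio); both inputs use the same currency."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return monetized_benefit - total_cost, monetized_benefit / total_cost


def cost_effectiveness(total_cost, outcome_units):
    """Return the cost of achieving one unit of the outcome."""
    if outcome_units <= 0:
        raise ValueError("outcome_units must be positive")
    return total_cost / outcome_units


# Hypothetical figures for illustration only; not drawn from any real program.
program_cost = 200_000.0       # dollars spent on the program
estimated_benefit = 500_000.0  # dollars of benefit after monetizing outcomes
spikes_responded_to = 40       # outcome counted in natural units, not dollars

net_benefit, bc_ratio = cost_benefit(program_cost, estimated_benefit)
cost_per_response = cost_effectiveness(program_cost, spikes_responded_to)

print(f"Net benefit: ${net_benefit:,.0f}")
print(f"Benefit-cost ratio: {bc_ratio:.2f}")
print(f"Cost per spike response: ${cost_per_response:,.0f}")
```

Note that only the first calculation needs a dollar value for the benefits; the second leaves the outcome in its own units, which is often more practical when benefits are hard to monetize.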

3.) Process Evaluation

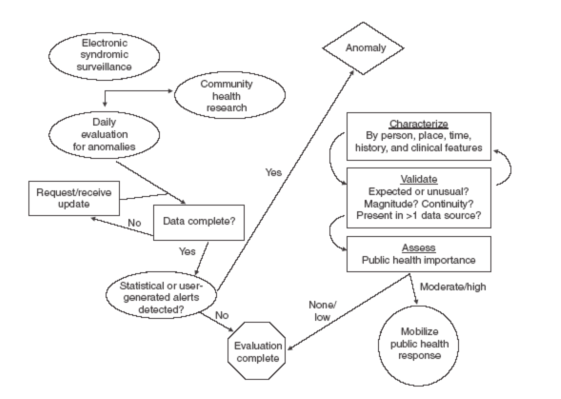

Process evaluation determines whether the plan is implemented as intended. It helps document and assess the activities being implemented under the program and the consistency of these activities in different circumstances, from both a program operation perspective and a service utilization perspective. Implementation of an OMAR plan can be evaluated in two ways:

- As a one-time process evaluation involving the staff and stakeholders.

- As continuous monitoring of selected indicators to support ongoing activities by providing feedback at a predetermined frequency or when needed.

Below is a framework that illustrates response protocols when using the ESSENCE syndromic surveillance system. This is an example of continuous process monitoring that detects anomalies in the data by following a set protocol.

Source: https://www.cdc.gov/mmwr/preview/mmwrhtml/su5401a22.htm
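
The idea of continuously monitoring an indicator for anomalies can be sketched in a few lines of Python. This is an illustrative assumption, not the detection method used by ESSENCE: the function name, the 7-day baseline window, and the 3-standard-deviation threshold are all hypothetical choices, and the counts are made up.

```python
from statistics import mean, stdev


def flag_anomalies(daily_counts, baseline_days=7, threshold_sd=3.0):
    """Return indexes of days whose count exceeds baseline mean + threshold_sd * SD.

    The baseline for each day is the preceding `baseline_days` days.
    """
    flagged = []
    for day in range(baseline_days, len(daily_counts)):
        baseline = daily_counts[day - baseline_days:day]
        center = mean(baseline)
        # Floor the spread at 1.0 so a nearly flat baseline does not flag tiny changes.
        spread = max(stdev(baseline), 1.0)
        if daily_counts[day] > center + threshold_sd * spread:
            flagged.append(day)
    return flagged


# Hypothetical daily overdose-related visit counts; index 9 is an injected spike.
counts = [4, 5, 3, 6, 4, 5, 4, 5, 4, 15, 5]
alerts = flag_anomalies(counts)
print(alerts)
```

In practice, a flagged day would trigger whatever response steps the agency's protocol defines; the threshold and window would need to be tuned to local data volumes.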

A successful process evaluation examines the following six components (Saunders, 2016):

- Fidelity – To what extent is the program implementation consistent with the established standards?

- Dose delivered – Are individual staff/organizations delivering the services they are responsible for?

- Dose received – To what extent do participants actively use the resources provided (initial use, continued use, or both can be measured)?

- Satisfaction – Are participants satisfied with the resources and with staff interactions?

- Reach – What percentage of the intended population has participated in the plan? Consider assessing barriers to participation for those who did not participate.

- Recruitment – What methods were used to approach participants and maintain their involvement?
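
Several of these components reduce to simple proportions once the counts are collected. The sketch below shows one way to compute them; the field names and numbers are hypothetical, and which counts best represent each component is an assumption that would depend on the program.

```python
def percentage(part, whole):
    """Return part/whole as a percentage, or None when the denominator is zero."""
    if whole == 0:
        return None
    return 100.0 * part / whole


# Hypothetical monitoring counts for one reporting period.
records = {
    "intended_population": 1200,  # people the plan aims to serve
    "participants": 300,          # people who took part at least once
    "continued_users": 180,       # participants who used resources again
    "services_planned": 50,       # service sessions staff were responsible for
    "services_delivered": 45,     # sessions actually delivered
}

# Reach: share of the intended population that participated.
reach = percentage(records["participants"], records["intended_population"])
# Dose delivered: share of planned services that were actually delivered.
dose_delivered = percentage(records["services_delivered"], records["services_planned"])
# Dose received (continued use): share of participants who kept using resources.
continued_use = percentage(records["continued_users"], records["participants"])

print(f"Reach: {reach:.1f}%")
print(f"Dose delivered: {dose_delivered:.1f}%")
print(f"Continued use: {continued_use:.1f}%")
```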

Some examples of questions that a process evaluation for an OMAR plan can answer are:

- Does the public health agency have an adequate number of qualified staff to perform the required functions?

- Does the public health agency coordinate effectively with community partners who are crucial to implementing the plan?

- Are the resources being used efficiently?

- Because some states have a centralized OMAR plan while others are decentralized, how does performance vary between sites, and what barriers, if any, need to be addressed?

- Are partnering organizations satisfied with the interaction with each other and the overall functioning of the program?

4.) Outcome Evaluation

An outcome evaluation examines progress on the outcomes the program intended to address (i.e., the right side of the logic model above). Outcomes are the program’s objectives for change and reflect the goals for the program set by the stakeholders. The challenge in conducting an outcome evaluation is translating these goals and objectives into outcome measures that can be captured.

Like other programs, implementation of an OMAR plan can result in short-term, intermediate, and long-term outcomes. While long-term outcomes are considered to have the most practical importance, short-term and intermediate outcomes are easier to assess and, during implementation, can provide an early indication of whether the program is moving in the desired direction. Some examples of each of these outcomes are listed below:

Short-term outcomes

- Earliest possible identification of an overdose spike.

- Timely communication of the situation to partners and the public.

- Timely coordination of activities with partners.

- Earliest possible identification and investigation in case of an event that impacts the public.

- Ongoing implementation and evaluation of the plan.

Intermediate outcomes

- Improve data sharing within sector (public health, public safety, behavioral health)

- Improve OMAR plan process (communication, efficiency, etc.)

- Increase collaboration and data sharing

- Ongoing implementation and evaluation of the plan.

Long-term outcomes

- Reduce or prevent morbidity and mortality from overdose events.

- Better quality of life among individuals with a substance use disorder.

- Constantly evolving OMAR plan to better meet the needs of the public.

When conducting an outcome evaluation, keep in mind that outcomes can be affected by events that are independent of the program (the effect of the COVID-19 pandemic on the implementation of OMAR plans is a clear example) and that the program may have unintended effects. An established way of identifying both is for the evaluator to maintain regular contact with program staff and program participants and to review prior evaluation research conducted in similar environments.

5.) Impact Evaluation

Impact evaluation assesses the difference between the outcomes that occur with implementation of the program and those that would have occurred had the program not been implemented. The goal is to determine whether the program has produced the desired effects in the target population. Impact evaluation therefore has considerable potential to influence high-level decisions and is sometimes considered synonymous with program evaluation.

While the main question answered through impact evaluation is whether the program produced the desired effects, other questions can be answered as well. Some common questions that can be answered when evaluating the impact of an OMAR plan are listed below:

- What is the difference in the desired outcomes that is attributable to the OMAR plan?

- Are there any unintended positive or negative consequences of implementing the OMAR plan?

- What is the impact of the OMAR plan on outcomes for different subpopulations in the region where the plan is being implemented?

- Is there a chance that provision of higher quality services and more resources would result in better outcomes?

- Does implementation of the OMAR plan match the intended plan for implementation?

- How does the fidelity of the plan vary across time, regions, and individuals (staff and/or population)?

- Is greater fidelity of implementation associated with larger program effects?

Impact evaluation can be challenging because it requires comparing the outcomes of the program with those that would have occurred in its absence. While a randomized controlled design is the ideal choice for impact evaluation, several constraints may prevent evaluating an OMAR plan this way. One alternative is to compare data on the same group of individuals before and after implementation of the plan. This group could include all individuals residing in a particular zip code, county, council district, or state. Another approach is to compare a region where the OMAR plan is being implemented with a region where it is not. However, the two regions should be comparable in ways that would produce the same results for both if the plan were not implemented. Comparison group designs such as these can measure program effects when paired with approaches that reduce or eliminate potential bias. These include multivariate regression analysis, which statistically adjusts the effect estimate for differences between the groups, and fixed effects designs, which eliminate biases associated with differences between groups on characteristics that are stable within a group.
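
The before-and-after and comparison-region approaches can be combined into a single estimate. The sketch below uses difference-in-differences, a technique not named above and offered here only as one possible illustration. It assumes that, without the plan, both regions would have changed by the same amount. All rates are hypothetical.

```python
from statistics import mean


def difference_in_differences(treated_before, treated_after,
                              comparison_before, comparison_after):
    """Estimate the program effect as the treated change minus the comparison change."""
    treated_change = mean(treated_after) - mean(treated_before)
    comparison_change = mean(comparison_after) - mean(comparison_before)
    return treated_change - comparison_change


# Hypothetical monthly overdose rates per 100,000 residents.
region_with_plan_before = [30.0, 32.0, 31.0]
region_with_plan_after = [27.0, 26.0, 25.0]
region_without_plan_before = [29.0, 31.0, 30.0]
region_without_plan_after = [30.0, 29.0, 28.0]

effect = difference_in_differences(
    region_with_plan_before, region_with_plan_after,
    region_without_plan_before, region_without_plan_after,
)
# A negative value suggests the rate fell more where the plan was implemented.
print(f"Estimated effect: {effect:+.1f} per 100,000")
```

Subtracting the comparison region's change removes the part of the before-and-after difference that would likely have happened anyway, which a simple before-and-after comparison cannot do. The estimate is only as credible as the comparability of the two regions.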

References

- Logic models: A tool for effective program planning, collaboration, and monitoring 4-2016 (PDF) (ed.gov)

- Program Evaluation Guide – Introduction – CDC

- Types of Evaluation (cdc.gov)

- Framework for Program Evaluation – CDC

- Trofatter MO, Dolinky AK. Overdose Monitoring and Response Logic Model. March 2021.

- Syndromic Surveillance on the Epidemiologist’s Desktop: Making Sense of Much Data (MMWR)

- Rossi, Peter H., Mark W. Lipsey, and Howard E. Freeman. 2019. Evaluation: a systematic approach. Thousand Oaks, CA: Sage.
